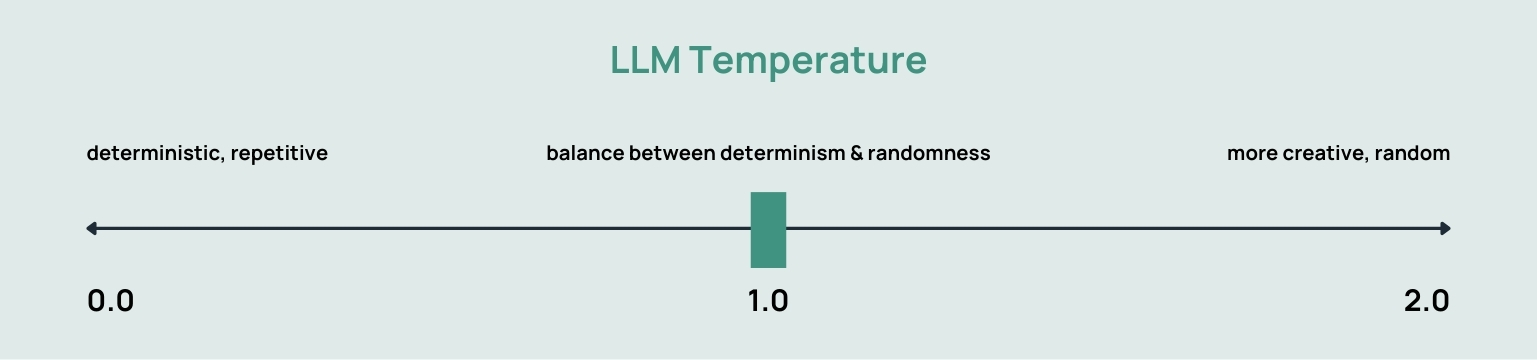

The LLM Temperature setting manages the balance between playing it safe and exploring new possibilities, which is essentially the trade-off between exploitation and exploration in the model’s output. It dictates whether the results are more predictable or more random and creative. Lower Temperature settings concentrate probability on the most likely tokens, making the results more predictable. Conversely, higher Temperature settings flatten the distribution so that less likely tokens are chosen more often, producing more varied and creative results.

When generating text, the model evaluates a range of possible next words or tokens, each with a certain probability. For instance, after the phrase “Once upon a time in a…”, the model might give high probabilities to words like “forest,” “village,” or “castle.” Temperature rescales these probabilities before the next token is sampled.
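To make the mechanism concrete, here is a minimal sketch of how temperature is commonly applied: each raw score (logit) is divided by the temperature before a softmax turns the scores into probabilities. The candidate words and their scores below are invented for illustration and do not come from any real model.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for words following "Once upon a time in a..."
candidates = ["forest", "village", "castle", "spaceship"]
logits = [2.0, 1.5, 1.2, -1.0]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, {w: round(p, 3) for w, p in zip(candidates, probs)})
```

At the lowest setting almost all of the probability lands on the top candidate, while at the highest setting the unlikely "spaceship" gets a noticeably larger share.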

Effect: Low Temperatures result in more deterministic responses. The model becomes more focused and tends to choose the most probable token at each step.

Characteristics: The output is more repetitive and conservative. It tends to stick to common phrases and patterns. Low-temperature sampling is useful when you want more controlled and coherent responses.

Effect: Medium Temperatures provide a balance between randomness and determinism. The model has some flexibility in choosing tokens, and there’s a mix of likely and less likely choices.

Characteristics: Responses are more diverse and creative compared to low-temperature sampling. This setting is often preferred for generating more interesting and varied outputs.

Effect: High Temperatures introduce more randomness into the generation process. The model is more likely to explore less probable options, leading to more unpredictable and creative responses.

Characteristics: The output becomes more diverse and may include unconventional or unexpected choices. High-temperature sampling is useful when you want to explore a wide range of possible responses or encourage more novelty.
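The three regimes above can be compared side by side. The sketch below is a toy illustration under assumed values: the token list, the scores, and the three temperatures (0.2, 0.7, 1.5) are all made up for the example. It reports the entropy of the next-token distribution (0 bits would be fully deterministic) and a handful of sampled tokens at each setting.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    """Shannon entropy in bits: higher means a more spread-out distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def sample_tokens(tokens, logits, temperature, n, rng):
    """Draw n tokens from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=n)

# Illustrative tokens and scores (assumptions, not real model output).
tokens = ["forest", "village", "castle", "desert", "spaceship"]
logits = [2.0, 1.5, 1.2, 0.0, -1.0]
rng = random.Random(0)  # seeded so repeated runs give the same draws

for label, t in (("low", 0.2), ("medium", 0.7), ("high", 1.5)):
    probs = softmax_with_temperature(logits, t)
    draws = sample_tokens(tokens, logits, t, 10, rng)
    print(f"{label:6} T={t}: entropy={entropy_bits(probs):.2f} bits, draws={draws}")
```

Entropy rises with temperature, which is one way to quantify the shift from repetitive, conservative output toward more diverse and unexpected choices.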

Choosing the right Temperature depends on the specific requirements of your task. If you want more controlled and focused responses, you might opt for a lower temperature. If you’re looking for diversity and creativity, a higher temperature could be more suitable. Experimenting with different temperature values allows you to fine-tune the balance between coherence and randomness in the generated text.

Finding the ideal Temperature for a language model is not a straightforward task and depends on your specific objectives. There’s no universal setting that works for every situation; instead, the best Temperature is determined by balancing factors like coherence, creativity, and task requirements. Here’s a guide to help you choose:

Lower Temperatures (e.g., 0.2-0.5) make the model’s responses more focused and deterministic, favoring accuracy and coherence. Higher Temperatures (e.g., 0.7-1.0) increase creativity and variability, but may reduce coherence.

For complex or nuanced topics, a lower Temperature might help maintain clarity and relevance. For creative tasks or brainstorming, a higher temperature can yield more diverse and novel ideas.

Higher Temperatures can sometimes lead to more verbose or tangential responses. If brevity is important, a lower temperature might be preferable.

Different tasks benefit from different temperatures. For factual queries, a lower temperature is usually better. For generating diverse content or exploring creative solutions, a higher Temperature can be more useful.

Consider who will read or use the output. For technical or formal contexts, lower Temperatures are typically better. For informal or entertainment purposes, a higher Temperature might be more engaging.

Different models may respond differently to Temperature adjustments, so it’s important to experiment and fine-tune based on the specific model you’re working with.

Try different Temperature settings and assess the quality of the outputs. This can be done through user feedback or qualitative evaluation. Be aware that the ideal temperature may change as the context or tasks evolve, so regular adjustments and testing can be helpful.
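One way to run such an experiment is a small sweep that generates several outputs per setting and scores them. The sketch below is only a starting point: `sweep_temperatures`, `distinct_ratio`, and `toy_generate` are hypothetical names, the distinct-output ratio is a crude stand-in for a real quality evaluation, and the toy generator replaces what would be a call to your actual model.

```python
import math
import random
from typing import Callable, Dict, Sequence

def distinct_ratio(outputs: Sequence[str]) -> float:
    """Fraction of outputs that are unique: a crude diversity score."""
    return len(set(outputs)) / len(outputs)

def sweep_temperatures(
    generate: Callable[[str, float], str],
    prompt: str,
    temperatures: Sequence[float],
    samples_per_setting: int = 20,
) -> Dict[float, float]:
    """Generate several outputs per temperature and score their diversity."""
    results = {}
    for t in temperatures:
        outputs = [generate(prompt, t) for _ in range(samples_per_setting)]
        results[t] = distinct_ratio(outputs)
    return results

# Toy stand-in for a real model call (hypothetical; swap in your own client).
_ENDINGS = ["forest", "village", "castle", "desert", "spaceship"]
_LOGITS = [2.0, 1.5, 1.2, 0.0, -1.0]
_rng = random.Random(0)

def toy_generate(prompt: str, temperature: float) -> str:
    scaled = [x / temperature for x in _LOGITS]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]  # unnormalized softmax
    return prompt + " " + _rng.choices(_ENDINGS, weights=weights, k=1)[0]

scores = sweep_temperatures(toy_generate, "Once upon a time in a", [0.2, 0.7, 1.2])
for t, score in scores.items():
    print(f"T={t}: distinct ratio = {score:.2f}")
```

In practice the diversity score would be paired with a judgment of quality, such as user feedback or a manual review, since diversity alone says nothing about coherence.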

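In some instances, adjusting the Temperature specifically for certain tasks or datasets can enhance performance. Temperature is applied at generation time, so it can be changed without retraining the model. If you also fine-tune the model on relevant data, revisit the Temperature afterward, since the best value may shift.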

Achieving the right Temperature setting involves finding a balance: too high can lead to incoherent results, while too low might make the output repetitive. It requires some experimentation to find the optimal setting. The quality of your prompts also influences the output. A well-crafted, specific prompt might work effectively with a higher Temperature, while a more general prompt could benefit from a lower Temperature to keep the response focused.

In practical applications, the Temperature setting is selected according to the desired outcome. A higher Temperature is suitable for tasks needing creativity or diverse responses. Conversely, a lower Temperature is preferred for tasks requiring accuracy or factual consistency.

When generating technical documentation, precision and consistency are paramount. A low Temperature makes the model's text more predictable and consistent in tone, which reduces the risk of erratic or off-topic output and makes the documentation easier to follow. It does not guarantee factual accuracy on its own, so the content still needs review.

For customer support, a balance between creativity and accuracy is important. A medium Temperature allows the model to generate helpful and varied responses while still maintaining a reasonable level of predictability and correctness. This can enhance user experience by providing relevant and contextually appropriate answers without being overly rigid.

In creative writing or brainstorming sessions, diversity and originality are key. A high Temperature encourages the model to produce more diverse and imaginative outputs, which can inspire new ideas and novel approaches. This setting is ideal for generating unique storylines, brainstorming innovative solutions, or exploring a wide range of creative possibilities.
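These three use cases could be captured as a small lookup table in application code. The sketch below is hypothetical: the preset names, the `pick_temperature` helper, and the specific values (0.2, 0.6, 1.0) are assumptions chosen to match the low, medium, and high settings described above, not recommendations for any particular model.

```python
# Illustrative presets only; the values are assumptions, not vendor guidance.
TEMPERATURE_PRESETS = {
    "technical_documentation": 0.2,  # low: predictable, consistent
    "customer_support": 0.6,         # medium: varied but still reliable
    "creative_writing": 1.0,         # high: diverse, imaginative
}
DEFAULT_TEMPERATURE = 0.6  # assumed fallback for unlisted use cases

def pick_temperature(use_case: str) -> float:
    """Return the preset for a use case, or the default if it is unknown."""
    return TEMPERATURE_PRESETS.get(use_case, DEFAULT_TEMPERATURE)

for name in ("technical_documentation", "customer_support", "creative_writing"):
    print(f"{name}: temperature={pick_temperature(name)}")
```

Treat values like these as starting points and adjust them after testing against the specific model and prompts in use.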