Top P, also known as nucleus sampling, is a parameter that controls the diversity of the generated responses by limiting the set of tokens considered at each step. Specifically, Top P sampling considers the smallest subset of words whose cumulative probability is at least P. Only words within this subset are considered for the next token generation.

Mechanism:

Here is an example scenario. Suppose a language model is generating the next word after “The cat sat on the”:

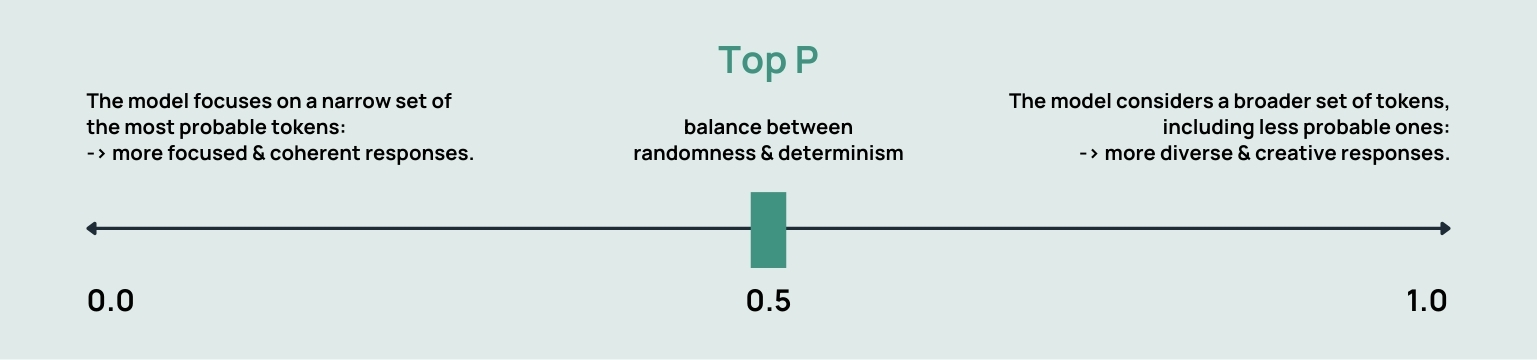

Effect: Low Top P values result in a more deterministic selection of tokens. The model focuses on a narrow set of the most probable tokens, often resulting in more focused and coherent responses.

Characteristics: Responses are likely to be more conventional and safe. The output is constrained to a smaller set of highly probable choices.

Effect: Medium Top P values strike a balance between randomness and determinism. The model has some flexibility in choosing tokens, allowing for a mix of likely and less likely options.

Characteristics: Responses are more varied compared to low Top P settings. There is room for the model to explore different possibilities while still maintaining a degree of coherence.

Effect: High Top P values introduce more randomness into the token selection process. The model considers a broader set of tokens, including less probable ones, leading to more diverse and creative responses.

Characteristics: Responses are likely to be more unpredictable and unconventional. High Top P settings are useful when you want the model to generate more novel and imaginative outputs.

Choosing the right Top P value depends on your specific use case. Lower values may be suitable for tasks where you want more control and coherence, while higher values can be beneficial for tasks where creativity and diversity are desired. Experimenting with different Top P settings allows you to fine-tune the balance between deterministic and random elements in the generated text.

Suppose a language model is generating the next word after “The cat sat on the”:

Candidate Pool: { “mat”, “couch”, “roof”, “sofa”, “fence”, … }

Output: “The cat sat on the roof.” (more varied and creative)

Candidate Pool: { “mat”, “couch”, … }

Output: “The cat sat on the mat.” (more predictable and coherent)

High Coherence Needs:

High Creativity Needs:

Top P (nucleus sampling) is a powerful technique in language models for balancing text diversity and coherence. By adjusting the threshold P, users can fine-tune the model’s output to meet specific requirements, from highly creative and varied to more predictable and consistent text. Understanding and leveraging Top P allows for more nuanced control over language model behavior, enhancing the quality and relevance of generated content.

Top P (Nucleus Sampling): Controls the diversity of text by sampling from the smallest set of top-probability words whose cumulative probability reaches P.

Temperature: Adjusts the randomness of predictions. Higher temperature values (closer to 1) produce more random and creative outputs, while lower values (closer to 0) generate more deterministic and focused text.

Frequency Penalty: Reduces the probability of a word based on its frequency in the text to discourage repetition and promote diversity.

Presence Penalty: Reduces the probability of a word if it has already appeared in the text, encouraging immediate variety in word choice.

Combining Top P with other techniques like temperature, frequency penalty, and presence penalty allows for fine-tuning the output of language models to meet specific requirements. By balancing these parameters, you can achieve the right mix of coherence, creativity, and diversity, leading to more effective and tailored text generation. Regular experimentation and feedback are key to optimizing these settings for different tasks and contexts.